Embracing "Usually Correct"

July 12th, 2025

TLDR

There are AI-skeptics that point to various "no code" waves of the past, and look at platforms like n8n and think the only difference

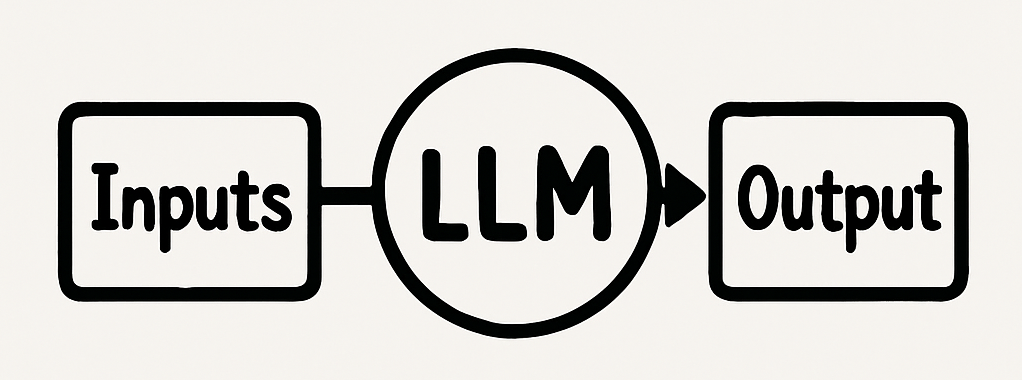

is hype. This time is different, because LLMs are opening up an entire world of problems to solve for software

developers. LLMs are going to "write", in realtime, the glue between systems and tools that until now were too non-deterministic - or stocastic to be handled before.

There's going to be plenty of work.

We've been trained well. Our code passes tests. If the tests pass today, they'll pass tomorrow - unless somebody writes a regression. Every function returns the expected output or throws a predictable exception. Our code compiles or it doesn't. The application lives in a binary world of deterministic correctness. We know it's not bug free, but the bugs are mistakes: anomalies where the code was implemented incorrectly against the mythical spec. They are temporary; we are going to find them and fix them. This mindset has shaped how we think about problems worth solving in software.

Every business process falls into one of two categories - it's either deterministic (it can be fully specified) or it isn't. The deterministic processes have been automated. When a developer can sit down with stakeholders, map out inputs and outputs and the glue that connects them, it's almost always worth it to let software do it instead of people.

Business processes that aren't deterministic are the ones where it's basically too difficult to capture the rules connecting workflow inputs and outputs. People have tried to automate these processes, and there's been modest success - but often businesses fall back to instead having a few automated "sub-processes" glued together by a human-in-the-loop operator. It's just cheaper. The operator probably isn't perfect, and sure - total cost over the lifetime of the business might be higher - but it's less risk, less investment, and things work well enough.

ML really started to change this, but only for specific tasks. Vision, NLP, and other classification tasks - probabilistic, not deterministic - got good enough and cheap enough that they were a better bet than the human operator. In the 2010s they essentially ate that entire segment, moving those tasks into the automatable category.

Vague specs

As software developers we've learned to avoid messy, ambiguous problems because we can't guarantee they'll be solved correctly every time. When we have vague specs, we know we need to work hard to turn them into precise ones. Our training teaches us to recognize where vague specs can turn into precise specs, and where they can't. We learn to highlight the edge cases, and plan how to deal with them. If there are too many, or an infinite number of "edge cases", then the process can't be fully automated - we quarantine the imprecision and automate the rest.
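A minimal sketch of that "quarantine the imprecision" habit, in Python. The keywords, action names, and the `Router` class are all invented for illustration; the point is only the shape: anything the rules can name gets automated, and everything else lands in a queue for a person.

```python
from dataclasses import dataclass, field

# Hypothetical rules, made up for this example: keyword -> automated action.
RULES = {
    "invoice": "post_to_ledger",
    "shipping notice": "update_delivery_date",
}


@dataclass
class Router:
    # Messages the rules could not classify, waiting for a human operator.
    human_queue: list = field(default_factory=list)

    def handle(self, message: str) -> str:
        """Return the automated action, or quarantine the message for a person."""
        text = message.lower()
        for keyword, action in RULES.items():
            if keyword in text:
                return action  # precisely specified case: automate it
        self.human_queue.append(message)  # anything else: human-in-the-loop
        return "escalated_to_human"


router = Router()
print(router.handle("Invoice #1042 attached"))                        # post_to_ledger
print(router.handle("We may need to revisit the terms we discussed"))  # escalated_to_human
print(len(router.human_queue))                                         # 1
```

The rules never get smarter on their own. Every new phrasing either earns a new rule or stays in the human queue, which is exactly why the messy cases tend to stay manual.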

If you work directly with stakeholders, you know that for most of them this differentiation isn't natural. How many times have you told a client, "I need precise specifications, this is too vague" and then proceeded to tease those specifications out of them? Non-software people just don't get this, because they don't live in a world that actually demands precision all the time. To them, edge cases aren't things that need to be driven out; they are things that people just adapt to in realtime. Usually they get handled well, sometimes not.

Consider building an agent that manages vendor relationships. It needs to parse contracts, negotiate terms, track deliverables, and escalate issues. A traditional approach would require codifying every possible scenario, creating rigid workflows that break when vendors behave unexpectedly. In the past, if we couldn't fully specify that process, we didn't build it. Instead, we stuck to simpler CRUD apps and left the interesting problems unsolved. That's what we'd do here - the interesting part (the glue) would be left to a human operator, with the CRUD automating the book-keeping.

Slow glue / Fast glue

Why do we automate? Automation is cheap (to run), never tires, and it's fast. The parts of a business process that can't be automated are the slow parts, because they are done by a human, between 9 and 5. In businesses, these parts aren't actually expected to be executed perfectly; it's just that in order to be "usually correct", a human's gotta do it. Software doesn't do "usually".

- Automated: Fast, don't tire, work the same way every time, cheap to run - but can't adapt in realtime and make judgment calls in new situations.

- Human: Slow, tire, sometimes make errors, expensive - but CAN adapt in realtime and make judgment calls!

LLM-powered agents change this equation. They're probabilistic by design, built to handle uncertainty and context-dependent reasoning. An agent that correctly interprets vendor communications 85% of the time, and gracefully handles edge cases, is viable. The 15% uncertainty isn't a bug – it's the price of admission to solving a previously intractable problem. The price is already being paid by having a human operator.

This isn't about accepting lower quality. It's about taking a process that's expected to be "usually correct" and automating it. It's about suddenly being able to write software to glue inputs and outputs together where the rules change subtly in unpredictable ways.

- Agent: Fast-ish, doesn't tire, sometimes makes errors, cheaper - but CAN adapt in realtime and make judgment calls! A hybrid.
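To put rough arithmetic behind the "price of admission" idea, here is a back-of-the-envelope sketch. Only the 85% figure comes from the text above; the handling costs, the human accuracy, and the cost of a mistake are made-up placeholders, and `cost_per_item` is a hypothetical helper, not a real costing model.

```python
def cost_per_item(handling_cost: float, accuracy: float, error_cost: float) -> float:
    """Expected cost of one item: handling plus the expected cost of mistakes."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return handling_cost + (1.0 - accuracy) * error_cost


# Illustrative numbers only: a human who is right 95% of the time at $5.00 per
# item, versus an agent that is right 85% of the time at $0.10 per item, where
# each mistake costs $20.00 to clean up.
human = cost_per_item(handling_cost=5.00, accuracy=0.95, error_cost=20.00)
agent = cost_per_item(handling_cost=0.10, accuracy=0.85, error_cost=20.00)
print(f"human: {human:.2f}  agent: {agent:.2f}")  # human: 6.00  agent: 3.10
```

With these placeholder numbers the agent comes out ahead despite making three times as many mistakes. Push `error_cost` up to 1000 and the comparison flips (roughly 55 for the human versus 150 for the agent), which is the whole trade-off in one line: "usually correct" only pays when being wrong is affordable.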

Let me be clear: if your problem genuinely requires 100% correctness - financial calculations, safety-critical systems, security validations - LLM agents are absolutely the wrong tool. Use them where they don't belong, and you'll fail.

I'm also not talking about replacing an entire application with an agent. When a process is deterministic, it's cheaper (to run), faster, and more reliable to codify it old-school style. Maybe we get an AI assist in writing the code, but it's still code. Usually most parts of a process are deterministic, and some aren't. The sub-processes that aren't can be agentic, using an LLM to connect inputs to outputs (tool calls) - while the others remain regular code.
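Here is one way that split might look, as a sketch under stated assumptions. The order fields, tool names, and `stub_agent` are all hypothetical; in particular `stub_agent` is a stand-in for a real LLM call and just does a keyword check so the example runs on its own. The deterministic step stays regular code, the agent step only picks a tool by name, and its answer is validated before anything runs.

```python
from typing import Callable


# Tools the agent step is allowed to choose from (hypothetical names).
def remind_vendor(order: dict) -> str:
    return f"reminder sent for order {order['id']}"


def escalate_to_human(order: dict) -> str:
    return f"order {order['id']} escalated"


TOOLS: dict[str, Callable[[dict], str]] = {
    "remind_vendor": remind_vendor,
    "escalate_to_human": escalate_to_human,
}


def days_late(order: dict) -> int:
    """Deterministic step: plain arithmetic, no model involved."""
    return max(0, order["today"] - order["due_day"])


def stub_agent(vendor_message: str, tool_names: list[str]) -> str:
    """Stand-in for an LLM that reads free text and names a tool.

    Assumption: a real version would call a model API here. This stub can
    also return a name that is not a known tool, as a model might.
    """
    return "remind_vendor" if "next week" in vendor_message.lower() else "renegotiate"


def process(order: dict, choose_tool: Callable[[str, list[str]], str] = stub_agent) -> str:
    if days_late(order) == 0:
        return "on schedule"  # deterministic path, regular code
    choice = choose_tool(order["vendor_message"], list(TOOLS))
    # Guardrail: treat the agent's answer as untrusted input; unknown -> human.
    tool = TOOLS.get(choice, escalate_to_human)
    return tool(order)


order = {"id": 17, "due_day": 10, "today": 14,
         "vendor_message": "Parts will ship next week, sorry!"}
print(process(order))  # reminder sent for order 17
```

Passing the agent in as a plain function keeps the non-deterministic part swappable and easy to test, and the fallback to `escalate_to_human` means a surprising answer degrades to the old human-in-the-loop behavior instead of failing.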

Opportunity

This creates an explosion of opportunity. We're not just going to write different software, we're going to write vastly more software. Problems that were too messy, too context-dependent, too human are now worth tackling. The demand for applications that can handle these previously impossible workflows is enormous. We've ignored and quarantined them for six decades!

Marc Andreessen famously wrote that "software is eating the world" – but maybe it couldn't quite manage the full meal with our deterministic tools. Now, with agents that can handle the messy, probabilistic parts of business and life, software might actually finish what it started.

Tools like Claude Code and Devin aren't going to eliminate the occupation of software development; they're giving us superpowers to move fast enough to keep up with the demand.

Some more of my work...

Ramapo College

Professor of Computer Science, Chair of Computer Science and Cybersecurity, Director of MS in Computer Science, Data Science, Applied Mathematics

Frees Consulting and Development LLC

My consultancy - Your ideas into real working solutions and product: building MVPs, modernizing legacy systems and integrating AI and automation into your workflows.

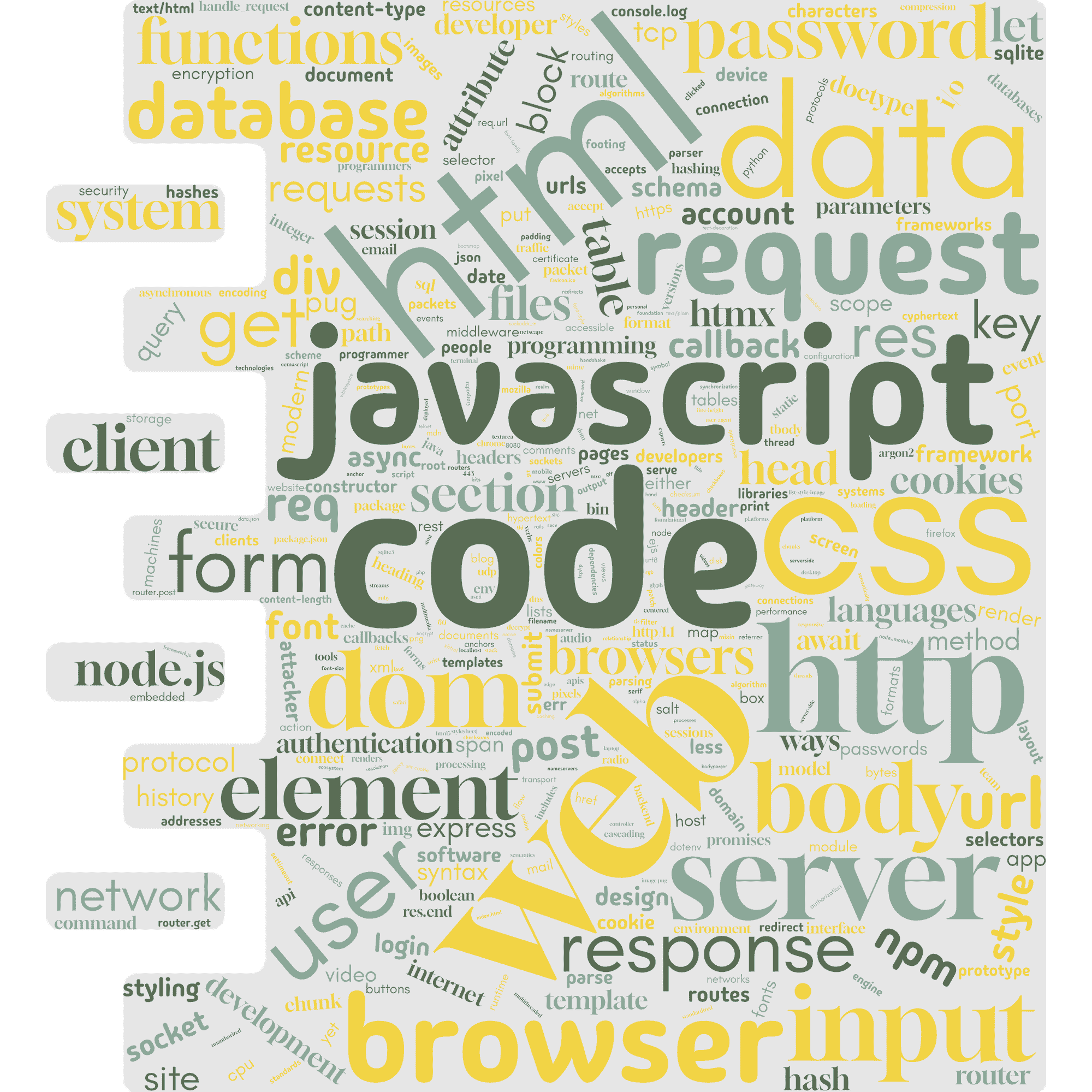

Node.js C++ Integration

My work on Node.js and C++ Integration, a blog and e-book. Native addons, asynchronous processing, high performance cross-platform deployment.

Foundations of Web Development

For students and professionals - full-stack guide to web development focused on core architectural concepts and lasting understanding of how the web works

The views and opinions expressed in this blog are solely my own and do not represent those of Ramapo College or any other organization with which I am affiliated.