Agentic Software Architecture: Changing MVC

June 13th, 2025

TLDR

While many devs worry about (or embrace) AI making their own work obsolete, they are missing a revolution in how software will actually be designed. Development is being turned on its head: the MVC architecture that's dominated our thinking for decades is morphing into an Agentic Software Architecture. It's going to let apps do much more than they used to.

There's going to be plenty of work.

There's an entire new class of application and UX coming. The change is analogous to the transition from text-based terminals to 2D WIMP interfaces (windows, icons, menus, pointer). The user interface is changing, and when that happens, what we do with software changes too.

What is UX anyway?

User Experience (UX) is about helping a user work with your application, and hopefully enjoy using it too. A UX designer's job comes down to three things:

- Figure out how to help a user understand what can be done with an app.

- Figure out how to let the user communicate what they want to do, in an easy and intuitive way that serves both novices and power users. (hint: not easy).

- Figure out how to communicate progress and results in an effective way.

User interface (UI) design and implementation is hard. Several things work against us:

- UIs are dumb, low-bandwidth communication channels that need more and more controls and variations as the application grows. They need to distill user intentions into discrete and unambiguous commands, so deterministic code can invoke the necessary application logic.

- UIs tend to be device dependent. We interact with applications through widgets like buttons and menus, and their optimal layout and affordances change depending on whether the user is on a phone, tablet, laptop, large screen, or AR interface.

- UIs need to be wired to application logic. UI code is event driven and has different requirements than application logic: responsiveness, feedback, and progress reporting. UI code also needs to give users ways to specify all the parameters of their intended actions, for beginners and power users alike. All of this leads to a complicated mapping between discrete visual controls and core app logic.

Mappings between UI controls and application logic are rigid and deterministic, because that's how code works. People don't think linearly or deterministically, so communicating through UI controls is always a mapping exercise. UX designers try hard to make this mapping easy.
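To make that rigidity concrete, here's a minimal Python sketch of how a traditional UI wires discrete controls to application logic. The control IDs and the `export_report` function are hypothetical stand-ins, not from any real framework. Every command has to be bound ahead of time, and every parameter has to come from some widget.

```python
def export_report(fmt: str, include_charts: bool) -> str:
    """Stand-in for core application logic (hypothetical)."""
    charts = "with charts" if include_charts else "without charts"
    return f"report.{fmt} ({charts})"


# Each discrete control maps to exactly one command; every parameter the
# logic needs must be read from some widget (checkbox, dropdown, ...).
CONTROL_BINDINGS = {
    "menu.file.export_pdf": lambda state: export_report("pdf", state["charts_checkbox"]),
    "menu.file.export_csv": lambda state: export_report("csv", False),
}


def on_click(control_id: str, widget_state: dict) -> str:
    """Event handler: dispatch a click to its bound command, or fail."""
    handler = CONTROL_BINDINGS.get(control_id)
    if handler is None:
        # No fuzzy matching: anything not wired up cannot be expressed at all.
        raise KeyError(f"no command bound to {control_id!r}")
    return handler(widget_state)


print(on_click("menu.file.export_pdf", {"charts_checkbox": True}))  # report.pdf (with charts)
```

A user who wants "the CSV, but with the charts" has no way to say so unless a designer anticipated it and added another control.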

MVC

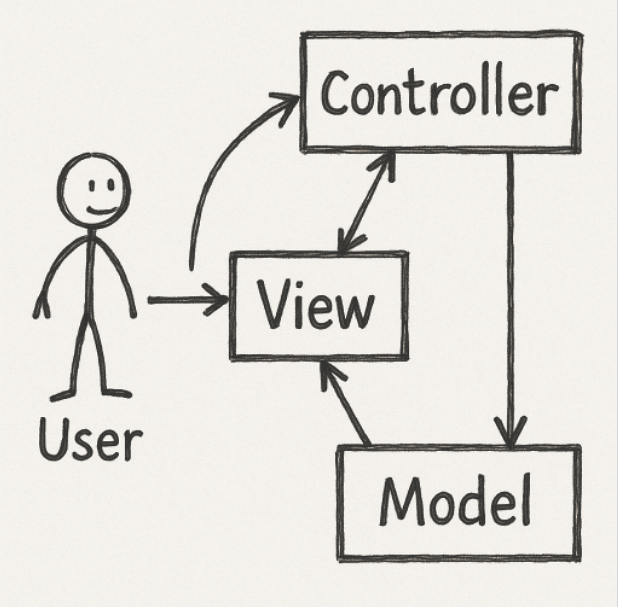

Model-View-Controller (MVC) is a software architecture pattern that separates concerns:

- Model: Manages data and business logic.

- View: Handles the user interface and presentation.

- Controller: Receives user input, updates the model, and selects the view.

MVC is really a way of organizing an application's architecture so the UI code doesn't interfere and commingle with the code that drives the core of the application.
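As a point of reference, here's a minimal MVC sketch in Python using a hypothetical to-do app. It's one of many ways to split the three roles, shown only to make the separation visible: the model enforces the rules, the view only renders, and the controller sits between user input and the model.

```python
from dataclasses import dataclass, field


@dataclass
class TodoModel:
    """Model: owns the data and the business rules."""
    items: list[str] = field(default_factory=list)

    def add(self, title: str) -> None:
        if not title.strip():
            raise ValueError("title must not be empty")
        self.items.append(title.strip())


class TodoView:
    """View: presentation only; knows nothing about business rules."""

    def render(self, items: list[str]) -> str:
        return "\n".join(f"[ ] {item}" for item in items) or "(nothing to do)"


class TodoController:
    """Controller: turns user input into model updates, then picks a view."""

    def __init__(self, model: TodoModel, view: TodoView) -> None:
        self.model = model
        self.view = view

    def handle_add(self, form_input: str) -> str:
        self.model.add(form_input)
        return self.view.render(self.model.items)


controller = TodoController(TodoModel(), TodoView())
print(controller.handle_add("write blog post"))  # [ ] write blog post
```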

Separation of concerns is always a great idea in software, but particularly so for user interfaces, given their complexity and proclivity to change. The architecture has been around since the 1970s. It's seen many permutations (HMVC, MVA, MVP, MVVM, and more), but it's well established as the way we think about building maintainable software.

It's not going away, but I think it's due for an update. The reason: we don't need to limit ourselves to low-bandwidth UI controls anymore. Conversational UIs are finally practical.

Agentic UX

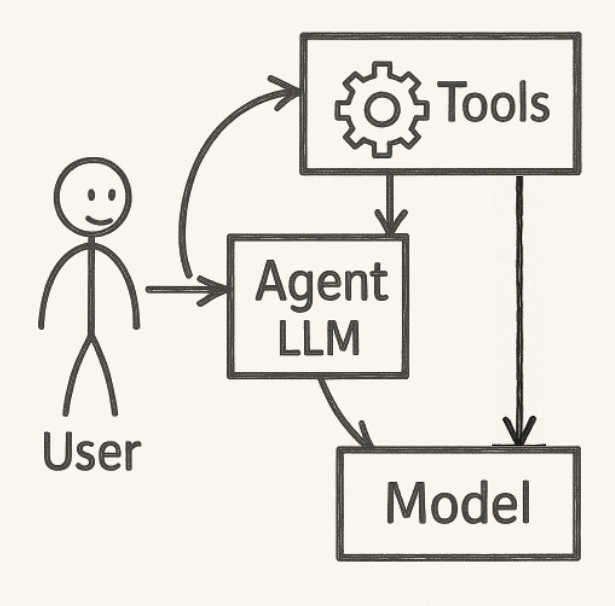

With Agentic UX the interface becomes natural language, since the LLM can communicate effectively with the user. The wiring is done by the LLM too: it maps its game plan (based on the user's wishes) to its knowledge and its available tools.

Fundamentally, an agent and its tools replace most of the View and Controller in MVC. Instead of the UI being a window through which users communicate their intentions and the application communicates the state of the model, the UI becomes a full-blown agent:

- An agent capable of accepting high-level user intentions.

- An agent that can translate those intentions into the controller's capabilities (application logic), and even ask follow-up questions when needed.

- An agent great at integrating results and communicating back to the user.
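Here's a minimal sketch of those three jobs, assuming the LLM has already turned the user's message into a structured `Plan` (an action name plus whatever arguments it could extract). The `Plan` type, the `book_room` capability, and the reply wording are all hypothetical. The point is that a missing parameter becomes a follow-up question rather than a validation dialog.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Plan:
    """What we assume the LLM produced from the user's message."""
    action: str
    args: dict


def book_room(room: str, date: str) -> str:
    """Stand-in for controller/application logic (hypothetical)."""
    return f"{room} booked for {date}"


# Capabilities the agent may use: action -> (handler, required parameters).
CAPABILITIES: dict[str, tuple[Callable[..., str], list[str]]] = {
    "book_room": (book_room, ["room", "date"]),
}


def agent_turn(plan: Plan) -> str:
    """Map a planned intent onto app logic, asking for anything missing."""
    capability = CAPABILITIES.get(plan.action)
    if capability is None:
        return f"Sorry, I can't {plan.action.replace('_', ' ')} in this app."
    handler, required = capability
    missing = [name for name in required if name not in plan.args]
    if missing:
        # Ask a follow-up question instead of failing.
        return f"Which {' and '.join(missing)} would you like?"
    # Pass only the parameters the handler expects, then phrase the result.
    result = handler(**{name: plan.args[name] for name in required})
    return f"Done: {result}."
```

For example, `agent_turn(Plan("book_room", {"room": "A101"}))` replies "Which date would you like?", and once the date is supplied the booking goes through.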

The effect is that traditional user interfaces melt away, replaced by pervasive agents with natural language interfaces: agents embedded right into the applications we work with.

Maybe in just a few years, the idea of users needing to learn a complicated UI - filled with menus, buttons, and various screens will feel as foreign as terminals feel to anyone who can't even remember Windows 95.

The agent becomes the user interface.

The Agent

There are really three parts to the "agent". I like to think of it as a combination of mind, body, and a set of tools.

- The part that pulls in user prompts and system prompts, controls the chain of thought, invokes tools, and presents output is the control flow - the body - of the agent. The body's main job is to handle everything deterministic and offload the thinking to the mind.

- The LLM is the mind. The LLM can be local or third party - but it's the part that allows the agent to make non-deterministic decisions, leading to conversations. It's also what integrates concepts - determining the right actions to take and the right tools to use.

- The agent can't do much but talk (or generate text) by itself. To take action, it needs to interact with application logic. The available application logic is the set of tools the agent has at its disposal.
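The split can be sketched as a small loop in Python. The body is ordinary deterministic code: it builds the transcript, asks the mind what to do next, runs tools, and enforces a step limit. The mind here is a scripted fake standing in for an LLM call, so the example runs offline; the message format, `get_balance` tool, and `run_agent` function are assumptions for illustration, not any particular SDK's API.

```python
import json
from typing import Callable


# Tools: plain functions wrapping application logic (hypothetical example).
def get_balance(account: str) -> float:
    return {"checking": 120.0, "savings": 900.0}[account]


TOOLS: dict[str, Callable[..., object]] = {"get_balance": get_balance}


def scripted_mind(transcript: list[dict]) -> dict:
    """Stand-in for an LLM. A real mind would be a model request; this fake
    asks for one tool call, then answers using the tool's result."""
    tool_results = [m for m in transcript if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_balance", "args": {"account": "savings"}}
    return {"answer": f"Your savings balance is {tool_results[-1]['content']}."}


def run_agent(user_prompt: str, mind: Callable[[list[dict]], dict],
              system_prompt: str = "You help with banking.", max_steps: int = 5) -> str:
    """The body: deterministic control flow around non-deterministic decisions."""
    transcript = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
    for _ in range(max_steps):
        decision = mind(transcript)
        if "answer" in decision:
            return decision["answer"]
        tool = TOOLS.get(decision["tool"])
        if tool is None:
            # Report the mistake back to the mind instead of crashing.
            transcript.append({"role": "tool", "content": f"unknown tool {decision['tool']}"})
            continue
        result = tool(**decision["args"])
        transcript.append({"role": "tool", "content": json.dumps(result)})
    return "Sorry, I couldn't finish that request."


print(run_agent("How much is in savings?", scripted_mind))  # Your savings balance is 900.0.
```

Swapping `scripted_mind` for a real model call (local or third party) leaves the body untouched, which is the separation the mind/body/tools framing is after.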

If an application has been designed with strict MVC separation in mind, thinking about its logic as tools is easier. The functions called to take action on your model are the tools. It's like converting a UI-driven application into one that also has a web API: the API endpoints are the tools.
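One way to picture this in Python, as a sketch rather than a prescribed pattern: a hypothetical `@tool` decorator that registers model functions, along with their docstrings and parameter names, so the same functions can back both a UI controller and an agent. The registry layout and the inventory functions are illustrative assumptions.

```python
import inspect
from typing import Callable

# Hypothetical registry: tool name -> callable plus the metadata an agent needs.
TOOL_REGISTRY: dict[str, dict] = {}


def tool(fn: Callable) -> Callable:
    """Expose a model function as an agent tool without changing it."""
    TOOL_REGISTRY[fn.__name__] = {
        "fn": fn,
        "description": inspect.getdoc(fn) or "",
        "params": list(inspect.signature(fn).parameters),
    }
    return fn


_stock = {"widget": 3}  # hypothetical model state


@tool
def check_stock(item: str) -> int:
    """Return how many units of an item are in stock."""
    return _stock.get(item, 0)


@tool
def restock(item: str, quantity: int) -> int:
    """Add units of an item to stock and return the new count."""
    _stock[item] = _stock.get(item, 0) + quantity
    return _stock[item]
```

A button handler can still call `restock("widget", 2)` directly, while an agent looks up `TOOL_REGISTRY["restock"]` and reads its description and parameters before deciding to call it.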

What does a tool actually look like? For now, it's an API that can be invoked via the Model Context Protocol (MCP). In the future, I expect every application will start by being an MCP server (or whatever becomes the dominant way to connect LLMs to tools).

- In 2025, we view an MCP server as an add-on to an application, but I think moving forward the MCP server will be the bedrock of the application.

- In 2025, the MCP server is viewed as a way for other applications (agents) to talk to our application, but I think moving forward our own application's agent, which acts on behalf of our own users, is likely to be the primary user of our application's MCP tools.
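To give a feel for the shape of an MCP tool, here's a stripped-down Python sketch that answers the two JSON-RPC methods MCP uses for tools: `tools/list` (each tool has a `name`, `description`, and JSON Schema `inputSchema`) and `tools/call` (a `name` plus `arguments`, answered with a list of `content` items). It deliberately skips the initialization handshake, capability negotiation, and transport that a real MCP server or the official SDKs handle; the `search_orders` tool and its data are hypothetical.

```python
import json
from typing import Optional

# Tool metadata in the shape MCP's tools/list returns (tool is hypothetical).
TOOLS = [
    {
        "name": "search_orders",
        "description": "Find a customer's orders, optionally filtered by status.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "customer_id": {"type": "string"},
                "status": {"type": "string", "enum": ["open", "shipped"]},
            },
            "required": ["customer_id"],
        },
    }
]

ORDERS = [
    {"id": 1, "customer_id": "c42", "status": "open"},
    {"id": 2, "customer_id": "c42", "status": "shipped"},
]


def search_orders(customer_id: str, status: Optional[str] = None) -> list[int]:
    return [o["id"] for o in ORDERS
            if o["customer_id"] == customer_id and (status is None or o["status"] == status)]


def _reply(req_id, result=None, error=None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC request; only the tool methods are sketched."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        return _reply(req["id"], result={"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "search_orders":
            return _reply(req["id"], error={"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        ids = search_orders(**params.get("arguments", {}))
        return _reply(req["id"], result={"content": [{"type": "text", "text": json.dumps(ids)}],
                                         "isError": False})
    return _reply(req["id"], error={"code": -32601, "message": "Method not found"})
```

The `description` and `inputSchema` are what the LLM actually reads, so writing them carefully is a big part of building the tools layer.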

I think the way we instruct agents to use tools today - largely through generic system prompts - is going to seem amazingly simplistic in a few years' time. We'll see developers build highly customized agents for their own applications, with much more robust prompts and logic that help the LLM mind of their agent use their tools extensively. I think there's a huge frontier of application development opening up in this space.

Agentic Software Architecture

We need to start thinking about user-facing software differently, adopting what I'm calling (and I am sure I'm not the first one to use this phrase) Agentic Software Architecture - ASA.

ASA doesn't throw away MVC; it modifies the View and Controller to become MBT - an Agent with a Mind, Body, and Tools crafted by the developer.

- Users interact with an Agent using natural (text, voice, maybe someday thought!) language.

- The Agent, containing a smart LLM mind and carefully developed tools, interprets the user's wishes, and makes use of tools to formulate a plan, take action, and produce results.

- The Agent communicates results back to the user through a combination of natural language and products - lists, documents, spreadsheets, images, videos - whatever was requested.

The UI is naturally multi-modal, conversational, and high bandwidth.

UX engineers will become agent builders - their purpose is the same, their methods very different. The architecture may kill the button / menu / dialog, but questions like "What will the user want to do" and "How can we present the user results" are still the right questions for UX designers. The difference is we'll be building and documenting tools that serve these anticipated needs, not clever user interface controls to expose them to people. We'll need to train LLM minds to work with our tools (APIs), instead of training people to use menus and buttons.

In 2025, it's tough to fully adopt this strategy. Replacing most of the UI of an app means a lot of token consumption. Local LLMs need a lot of resources, and non-local third party LLMs are too expensive to have every user interaction run through them - especially when you consider how tool usage burns up tokens. While LLMs are getting better every day, they aren't as accurate as deterministic buttons and menus just yet either (although when you factor in user error, it's not quite as clear).

This is going to change. Better LLMs mean more reliable tool usage and more accurate planning and execution. Cheaper (per-token) LLMs mean more room to use tools, to communicate iteratively with the user, and to keep more context. Faster and more resource-efficient LLMs mean quicker response times and local execution.

All of these things are coming, and they are all going to enable ASA even further. I'm imagining a future where an agentic UX is normal, in every app you use. A future where software developers think of agents like they think of UI frameworks today.

Note this is different from the vision where each of us has a single personalized agent acting on our behalf across applications. In this vision, each application has an agent-based UX that individuals interact with.

I personally think this is a more likely outcome, and a better one. The concept of everyone having their own agent interacting with the entire world on their behalf is attractive from a personalization standpoint, but it's a privacy and equity nightmare. It's also a single point of failure for everyone. The many-agent approach democratizes access to artificial intelligence, without concentrating an individual's essence into one super-agent.

What does this mean for developers?

In 2025 and beyond, we need to start thinking of UX in three layers:

- Wiring up a UI for the user to communicate with an LLM - natural language (text, voice, and beyond). It will be more than just chat.

- Engineering agents that deterministically invoke and augment the LLM's capability, implement multi-tenant rules, guardrails, and other critical application features. They are going to be more than just for loops.

- Creating the tools layer of our applications - APIs carefully crafted and documented so an LLM can make use of them. MCP is probably just the beginning.
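The second layer is where a lot of the non-LLM engineering lives. Here's a minimal sketch, under assumed names (`Session`, `guard_tool_call`, and the role/tool policy are all hypothetical), of deterministic checks an agent body might run before executing any tool call the LLM proposes: role-based permissions, confirmation for destructive actions, and a multi-tenant rule that never lets the model choose the tenant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    user_id: str
    tenant_id: str
    role: str


# Hypothetical policy: which roles may call which tools, and which tools
# change data and therefore need explicit user confirmation.
ALLOWED = {"viewer": {"list_invoices"}, "admin": {"list_invoices", "delete_invoice"}}
NEEDS_CONFIRMATION = {"delete_invoice"}


def guard_tool_call(session: Session, tool: str, args: dict, confirmed: bool = False) -> dict:
    """Check a proposed tool call and return the arguments to actually use.

    Raises PermissionError when policy forbids the call."""
    if tool not in ALLOWED.get(session.role, set()):
        raise PermissionError(f"{session.role} may not call {tool}")
    if tool in NEEDS_CONFIRMATION and not confirmed:
        raise PermissionError(f"{tool} requires user confirmation")
    # Multi-tenant rule: overwrite any tenant id the model supplied.
    return {**args, "tenant_id": session.tenant_id}
```

When a check fails, the body can turn the `PermissionError` into a message for the mind, which can then ask the user to confirm or explain why the action isn't available.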

In the relatively short term, yes, AI coding tools are going to eat away at the need for low-level, entry-level coders. However, it's a mistake to view what's happening with AI as a career killer. We are about to see a complete revolution around what people expect from software, and how people expect to use it.

Existing software will need to be retrofitted, redesigned, and often completely rewritten to meet these expectations. We will be doing new things with software, as what is possible is changing. The collective volume of work to be done is monumental, so if we get some help from developers called Claude and Devin, that's a good thing!

Some more of my work...

Ramapo College

Professor of Computer Science, Chair of Computer Science and Cybersecurity, Director of MS in Computer Science, Data Science, Applied Mathematics

Frees Consulting and Development LLC

My consultancy - Your ideas into real working solutions and product: building MVPs, modernizing legacy systems and integrating AI and automation into your workflows.

Node.js C++ Integration

My work on Node.js and C++ Integration, a blog and e-book. Native addons, asynchronous processing, high performance cross-platform deployment.

Foundations of Web Development

For students and professionals - full-stack guide to web development focused on core architectural concepts and lasting understanding of how the web works

The views and opinions expressed in this blog are solely my own and do not represent those of Ramapo College or any other organization with which I am affiliated.